Inference with Claudie

In this blog post, we’ll walk through how to connect an on-premises server equipped with custom hardware to AWS, forming a hybrid cluster, and then use that infrastructure to run an AI workload.

Our experience running an AI workload in Kubernetes – Part 4 <em>The Scaling Challenges</em>

In the previous part of this series, we walked through our migration from the RayCluster CRD to the RayService CRD. To complete the picture, this post covers the challenges we’ve faced and the improvements made to our setup running in a cost-optimized multi-cloud Kubernetes cluster.

Our experience running an AI workload in Kubernetes – Part 3 <em>Migration to RayService</em>

Brief outages caused by Ray head node restarts were no longer acceptable. In this post, we dive into our migration from the RayCluster CRD to the RayService CRD, which enabled rolling updates, external GCS storage, and more. We share how we tackled challenges such as unpredictable deployments, slow Ray worker node start-up, and ensuring high availability with Dragonfly. If you want to understand how to make Ray workloads more resilient, predictable, and production-ready on Kubernetes, this post walks through our practical solutions and lessons learned.

Our experience running an AI workload in Kubernetes – Part 2 <em>Limitations & Pitfalls of our solution with RayCluster CRD</em>

In this part of our series, we share the challenges we faced running Ray Serve Deployments in production using the RayCluster CRD. Along the way, we tackled issues like ephemeral head nodes, RayCluster’s autoscaling quirks, and the limitations of rolling updates. If you’re curious about bridging the gap between traditional Kubernetes workloads and the unique demands of AI applications on Ray, this post dives deep into using the RayCluster CRD in K8s.

Our experience running an AI workload in Kubernetes – Part 1 <em>Lift & Shift Ray applications to K8s</em>

In this post, we share our hands-on experience helping our client, Mixedbread, run their AI applications on Kubernetes using the KubeRay Operator. During the migration from a hyperscaler to a multi-cloud environment powered by claudie.io, we cut infrastructure costs by 70% while tackling challenges around RayCluster resilience and Ray Serve Deployments.

Exploring Multi-Tenancy Solutions for my Kubernetes Learning Platform

Multi-tenancy in Kubernetes presents various complex challenges, including security, fairness, and resource allocation. This blog discusses the challenges associated with multi-tenancy and the technology choices made for a Kubernetes-based …

A different method to debug Kubernetes Pods

By Adam Stawski, 15 August 2023. In this blog, I will demonstrate a step-by-step guide on how to access a running Kubernetes Pod by examining its namespace.

Evaluating etcd’s performance in multi-cloud

By Adam Stawski, 12 May 2023. Many companies are focusing on making their workloads as highly available as possible. The intention behind moving their workloads from local datacenters …

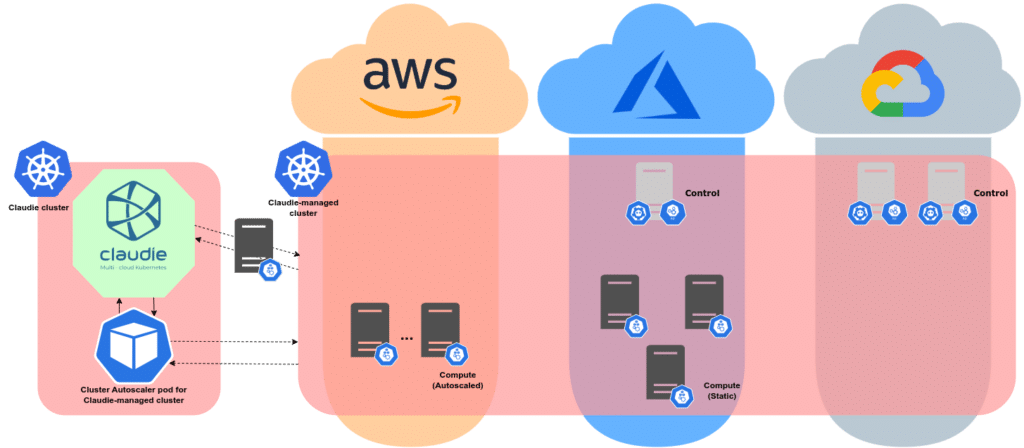

Introducing Cluster Autoscaler into Claudie

By Miroslav Repka, 02 May 2023. Excitingly, Claudie has recently introduced integration with Cluster Autoscaler, providing Claudie users with full functionality of the autoscaler across any cloud or mixture of …

Cloud-agnostic Kubernetes Clusters

By Bernard Halas, 23 November 2022. Kubernetes is often referred to as “the operating system of the cloud”. It gives freedom to build feature-rich platforms for operating your application stacks.

Traffic Encryption Performance in Kubernetes Clusters

By Samuel Stolicny, January 18, 2021. Building a hybrid Kubernetes cluster among various environments (public providers and on-premise devices) requires a layer of reliable and secure network connectivity. Choosing the …